Autonomous Software Engineers: Not Quite There …. Yet

26-04-2024

During the past few weeks, we’ve all seen the meteoric rise and “fall” ¹ of Devin, the first AI software engineer. This begs the question, will AI be capable of replacing software engineers in the near future?

We’ll take a deeper dive into this question, how we are testing some of these ideas at Blar, and what could possibly lie ahead in the future

Blar

For our new readers, I’ll quickly introduce Blar.

At Blar, we are obsessed with structuring data in such a manner that autonomous AI agents can quickly traverse said data and retrieve the most important information needed to complete a task ².

So, how exactly do we achieve this?

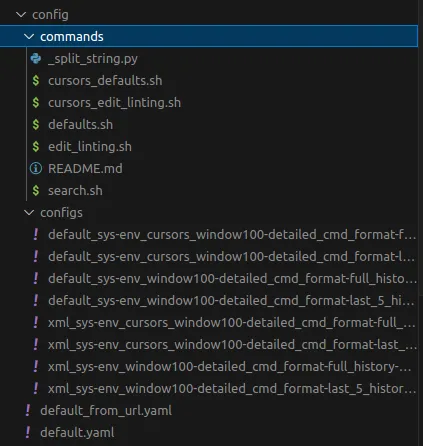

Our current solution relies heavily on graphs. Graphs consist of nodes and the edges between nodes. This structure helps us model not only data points (nodes), but also relationships between these data points (edges). This way, we can start an AI agent at any given data point and let it autonomously hop between different nodes via the edges. Think of it like your operating system, where you can open folders and files by clicking them — but in this case, it’s data!

A simple File System with folders and files inside

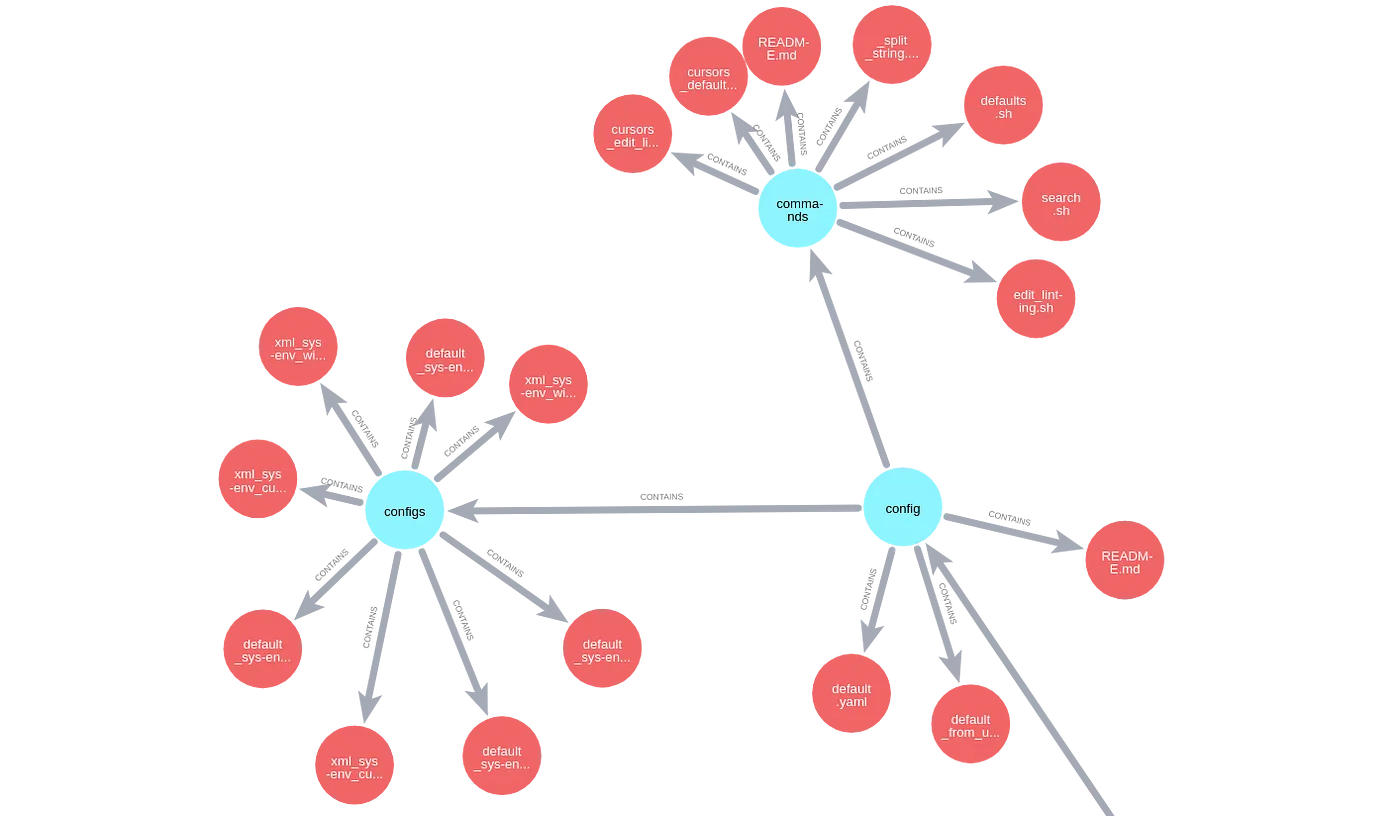

Image depicting a graph of a file system Light blue nodes are Folders and Red nodes are files

So what does this have to do with code and Devin?

Recently, we’ve been exploring how graphs can help us model complete code bases.

As mentioned, a file system is a clear example of a graph; folders can contain files and other folders. What’s particularly interesting in code is that you also have files that import other files. You can go into even further detail: files that contain classes, classes that contain functions, and even functions that invoke other functions. It’s all connected.

Visual depiction of how it feels working with graphs

To achieve this, we used a combination of Tree-sitter with Llama Index, as well as a bit of our code. Check out our GitHub repo. We also utilize Neo4j to save and visualize the data.

A representation of a Code Base with our graph model

After modelling code bases as graphs, we wanted to mount an agent to see if it was capable of traversing the graph. Our first use case was a debugger agent, we described a bug and gave it a starting point.

For example, “My code does not distinguish between directory nodes and it creates multiple connections between them, the starting node is run.py where I executed the code”. This way our agent would start from that node, analyze the code, see what classes were defined, what functions were called, and then it would hop to the next most relevant node and do the same. Here’s a video demonstration.

SWE-Bench

Next, we sought to test our agent and benchmark it against alternatives in the market.

Through this process, we came upon SWE-Bench. This benchmark consists of a series of issues posted in open-source repos, and the goal is to generate a patch that fixes the issue. This is the same benchmark Devin used. At first glance, it seemed like the perfect benchmark, but digging deeper into it we realized we had to produce a ton of code just to be able to run the benchmark. We needed to create a Docker container, emulate the same environment (package versions, etc), download the repos, check out to the correct commit, create the graph, traverse the graph, give the agent the ability to edit code, execute tests, etc.

Lucky for us, a few days later the team behind SWE-Bench released an open-source agent that did everything we needed. Our next objective was to adapt the agent to use Blar to navigate the code.

Guess what, that turned out to be easier said than done. We had to understand a whole code base to adapt it correctly, it was quite overwhelming at first glance. That was until Pato (Blar’s CEO) suggested: “Why don’t we use our agent to traverse the code and help us look for places where we should modify the code?”. Aha! Therefore, we generated the graph of the SWE-Agent repo ³ and asked our agent for help, it worked quite well ⁴, saving us hours of code searching. As a result, we managed to start our first tests in just a couple of days.

Results

We ran the new SWE-Blar-Agent within the dev split of the SWE-Bench LITE ⁵. Not only did we manage to resolve 5.5% more questions than the native approach, we also managed to save ~10% on the cost of prompting GPT4. Despite hammering our way into integrating Blar, we achieved improved results as many of the prompts could be further optimized and the tools could be tweaked for a better integration.

Let’s dig deeper into the results, shall we? Out of 18 questions, ⁶ the original agent managed to solve 3 questions while our agent solved 4. Cheaper with an additional question answered correctly, good but not great.

For context, Devin managed to solve 13.84% out of 300 questions. See, context is everything ;)

If we dive deeper, we can measure how many times the agent modified the correct file. To compare this we took the gold patch, extracted the modified files, and compared it with the patch our model suggested. We removed all the test files created by the agents to make the comparison. Running this comparison we found that the original agent modified 77.27% of the files correctly, while the SWE + Blar Agent modified 89.47% ⁷ of the files correctly.

Lessons

So what are the key takeaways from all of this?

Firstly, one thing that remains clear is that autonomous AI agents are not currently capable of replacing human software engineers. A 14% accuracy in correctly resolving issues is not a good metric, and it’s unlikely that using the current technology this metric will improve much.

What is interesting though is that the agent has a 90% accuracy hit rate in identifying the correct file to modify. Current agents are very good at navigating through code, comprehending it, and seeing where the possible bug might be lying; translation, a huge potential time saver for software engineers in finding a good starting point to identify the cause of a problem.

So if AI is so good at identifying where to modify the code, why does it do an awful job at modifying it?

Here’s where human ability comes into play. Being a software engineer is not about finding the location of the bug, it’s about comprehending what’s causing the bug’s behaviour and what is the best solution to fix said behaviour.

A quick example…

When we were coding the graph constructor, I encountered a bug when parsing files. A certain function would return None causing a “TypeError: 'NoneType' object is not subscriptable”, so I asked our agent to help me debug this problem. Its solution was to simply include a line that looked something like this:

Although this indeed fixes the bug and enables the code to run correctly, I wanted to parse the file, not simply skip it with a return. The bug was much deeper than merely skipping the problematic files. I wanted to find the root cause so that every file would parse correctly.

If we let AI autonomously debug our code we’ll end up with a bunch of code that runs but doesn’t work.

So what is the difference between a software engineer, like me, and AI?

I believe it’s the amount of context that I possess that the AI is missing.

I know what my code is about, what it is supposed to do, and the reason why I wrote it. The AI on the other hand was just presented with a bug, a bit of context on what the code is supposed to do, and asked to do the best it could to fix the problem.

Imagine if you were asked to fix a bug within a code base you have never seen, you have never worked on, and whose purpose you do not understand. You will probably “fix” the bug and manage to get the code running, but was said fix the intended resolution? Probably not.

Future Directions

So is this the end? AI will never be capable of replacing software engineers?

I strongly believe that AI will be capable of replacing some software engineering jobs in the short-term, jobs like debugging, writing test files, documenting, and maybe creating some simple functionalities.

It’s inevitable that LLMs will improve their capabilities in reasoning, context management, and logical thinking. As Sam Altman says, “These are the stupidest the models will ever be”.

What AI is currently missing is context, I believe context is all you need ⁸.

We need to find a way to not only help AI understand code, but also help it understand the purpose of the code, bridge the gap between code logic, and business logic. Meaning, how a modification in a file does not only impact the code, but also how the modification impacts the business or application said code is running on as well.

SWE-Agent code repo visualized in a different way using yFiles library

At Blar, we believe graphs will help us bridge this gap. Currently, our nodes contain only code information like the code itself, the position in a file, the path, etc. This acts like an operating system, and we could see the effect within the small improvement our solution achieved compared to using a Docker running a whole OS. We are working on ways to integrate documentation and business logic that help the AI agent understand why the code is there, the impact of changing some of the code, the different workflows in the code, and how we can help LLMs better understand the code base as a whole.

We are also working on ways to enrich the nodes with information each time an agent passes through a node. When traversing the graph, the agent is asked for “thoughts”, which we can leverage by saving them in the node. This serves both as documentation for the node and as long-term memory for future agents⁹. We want to be ready for when GPT-5 launches, so Sam what are you waiting for? ;)

Fall is a bit dramatic just a couple of videos criticizing a few aspects of it. I have nothing but admiration for the team behind Devin.

Easier said than done :/

The Code Base image is actually the graph representation of SWE-Agent Repo :)

The only limitation it had is that it only processed .py code and some changes required to modify .sh and .yml code

It’s surprisingly expensive to run costing around 60 USD to run just 23 questions.

2 of the 23 original questions failed to execute the evaluation. 3 Questions had problems with the docker environment

These percentages are out of the times the agent actually modified a file, because of implementation issues sometimes the agent would not modify any file apart from the test file it generated

Had to use this cliché phrase I’m sorry.

No token shall be wasted!!